The Super Programmer

Existential Rambling

February 16th, 2024

OpenAI, the legendary AI company of our time, has just released their new product, Sora, an AI model capable of generating one minute of mind-blowingly high-quality video content given a text prompt. I’m scrolling through Twitter, watching the sample outputs, feeling extremely jealous of those who have had the opportunity to contribute to building it, and I also feel a bit of emptiness. I’m thinking about how this would affect the video industry and Hollywood. How many people’s work will become meaningless just after this? And for me, there is also a disturbing question:

Does it make any sense for me to write this book at all? If a machine is able to generate convincingly real videos, how hard would it be for it to write a tutorial book like this? Why would I put any effort in if anyone is able to generate their customized masterpiece in seconds?

I know this will happen someday, and I'm not against it. I truly appreciate the fact that I was born in an era when the AI revolution is happening. AI will someday be able to generate better content than we can, and many of our efforts will simply become unnecessary. In my opinion, that future is not far off.

Anyway, my temporary existential crisis in this moment made me immediately pick up my phone and express my heartbreak and sadness in the very first section of this book. Perhaps this is the only way I could convince myself to continue writing a book: by pouring some real human soul into it.

February 25th, 2024

A few days have passed, and I rarely see people talking about Sora. It’s starting to become normal, I guess. People who were excited at first are now finding Sora boring. I just realized there is something called “Hedonic Adaptation.” It holds that humans always return to a stable level of happiness, despite positive or negative changes in their lives. This happens with the advancement of technology all the time. At first, we are all like "woah," and after some time, everything gets boring again. Although this may seem unfair, it drives us humans to progress because, you know, being stuck is boring.

I can’t imagine how many "woah" moments humans have had throughout history. The things we hold in our hands right now would seem like miracles to people who lived 300 years ago, but to us, old technology seems basic and trivial.

I really hope this book will be able to fulfill its purpose: to make the interested reader appreciate what it took for us to reach this level of advancement in computer-driven technology.

Preface

The book you're about to read is the result of exploring various areas in computer science for over 15 years. The idea of writing this book began around 8 years ago when I was a high-school student, getting ready for college. At that time, my focus was on learning computer graphics and 3D rendering algorithms. The idea of generating 3D images by manually calculating the raw RGB values of pixels intrigued me, and I'm the kind of person who wants to teach everyone the exciting things I have learned. Unfortunately, not everyone I knew at that time was interested in learning low-level stuff like this. Instead of trying to teach what I had learned, I decided to write a book. I was confident I'd have an audience, as many programmers worldwide are eager to learn more than just web-application development (a common career path among software engineers).

So, I started writing the book, named it "Programming the Visuals," and made a lot of progress! Not sure what happened exactly, but I got a bit discouraged after finding it hard to fill a book with content. I found myself explaining everything I wanted to explain in less than 40 pages, and well, 40 pages are not enough for a technical book. I didn't want to clutter my book with all the details of different rendering algorithms. I just wanted to explain the core idea, in very simple words and with easy-to-understand code examples. My goal was to introduce this specific field of computer science to people, hoping they would get encouraged enough to learn anything else they'd need by themselves.

My computer graphics book remained untouched for years, during which I earned a bachelor's degree in computer engineering and started to learn about other interesting fields of computer science. University was one of the places where I got clues about what to learn next, although I have to admit that most of my knowledge was gained by surfing the internet and trying to make sense of things by myself. University professors do not really go deep into what they teach (unless you are at a top-notch university; I'm not talking about the exceptions). University courses will, however, give you a lot of headlines that you'll have to dig into by yourself, and that makes sense too: you can't expect to become outstandingly good at everything in a relatively short period of time. Chances are you will gain specialized knowledge in only one of the fields of computer science.

The fact that success often comes from going deep into only one of the many fields of computer science didn't stop me from learning the others (it did take a long time, though). After getting comfortable with generating pixels, I started to learn about building computers from bare transistors, writing software that can compose and decompose sounds, crafting neural networks from scratch, implementing advanced cryptographic protocols, rolling out my own hobby operating system, and all kinds of nerdy things that the average software developer avoids learning (or just has not had the opportunity to learn).

The result? I transformed myself from an average programmer who could only build web applications into a programmer who knows the starting point of building any kind of computer software, and who can hopefully help get computer-related technology back on track if a catastrophic event happens on Earth and everything we have is gone (this is something I think about a lot!).

After expanding my knowledge by learning all these new concepts, I felt it was time to extend my 40-page computer graphics book into a more comprehensive work, covering additional fundamental topics. The result is the book you're reading now!

Support

If you enjoy what I'm writing and would like to support my work, I would appreciate crypto donations! I attempted to distribute my work through computer programming publishers, but the consensus was that tutorials covering a wide range of unrelated topics wouldn't sell well. Therefore, I have decided to continue TSP as an open-source hobby project!

- Bitcoin: bc1qjvcsjt57des7j4runchxalksueqlxgadx7fwll

- Ethereum: 0x1a34a6763f55887444ffbd8f8d4fae8197478675

Also, I'm thinking of allowing anyone to contribute to the writing and editing of this book, so feel free to submit your shiny PRs!

GitHub: https://github.com/keyvank/tsp

Intro

Legos were my favorite toys as a kindergarten student. They were one of the only toys I could use to build things that didn't exist before. They allowed me to bring my imagination into the real world, and nothing satisfies a curious mind more than the ability to build unique structures out of simple pieces. As I grew up, my inner child's desire to create took a more serious direction.

My journey into the fascinating world of computer programming began around 20 years ago, when I didn't even know the English alphabet. I had a cousin who was really into electronics at that time (surprisingly, he is in medicine now!). He had a friend who worked in an electronics shop, and he used to bring me there. We would buy and solder DIY electronics kits together for fun. Although I often heard the term "programming" from him, I had no idea what it meant until one day when I saw my cousin building the "brain" of a robot by typing characters on a screen and programming something he called a "microcontroller". The process triggered the same parts of my brain as playing with Lego pieces.

I didn't know the English alphabet then, so I just saw different symbols appearing on the screen with each keystroke. The idea that certain arrangements of symbols could cause unique behaviors in the robot he was building just blew my mind. I would copy code from my cousin's programming-tutorial book onto his computer just to see the behaviors emerge when I entered some kind of "cheat code". I began to appreciate the power of characters. Characters became my new Lego pieces, but there was an important difference: it was impossible to run out of characters. I could literally use billions of them to build different behaviors.

After around 20 years, I still get super excited when I build something I find magnificent by putting the right symbols in the right order. I was curious enough to study and deeply understand many applications of computers that have had a significant impact on our everyday lives but don't get the attention they deserve these days, so I decided to gather all this information in one place and write this book.

When I started writing this book, I expected it to be a purely technical book with a lot of code, targeting only programmers, but I ended up writing a lot of history, philosophy and even human anatomy! So the book could be a good read for non-programmers too! That said, I should warn you: it’s crucial to have some programming knowledge to fully appreciate and understand the content!

The Super Programmer is all about ideas, and how they have evolved through time, leading to the impressive technology we have today. I wanted this book to depend as little as possible on specific technologies, so that the code does not become obsolete over time. I first thought of writing the code in some pseudocode language, but I personally believe that pseudocode is not coherent enough, so I decided to stick with a popular language, Python, which as of today is known by many engineers and scientists.

Who is this book for?

This book is for curious people who are eager to learn some of the most fundamental topics of computer and software engineering. The topics presented in this book, although very interesting in my opinion, are some of the most underrated and least discussed fields of computer programming. This book is about old technology: the technology we use every day but, as average engineers, refuse to learn and extend. The literature around each of these topics is so large that only specialists will ever be motivated to learn them.

The Super Programmer is for programmers who don't want to limit their knowledge and skills to a very narrow area of software engineering. The Super Programmer is for programmers who can't sleep at night when they don't exactly understand how something in their computer works.

There is a famous quote, attributed to Albert Einstein, which says: 'You do not really understand something unless you can explain it to your grandmother.' Not only do I agree with this quote, I would like to extend it and claim that you don't understand something unless you can explain it to your computer. Computers are dumb. You have to explain every little detail to them, and you can't skip anything. If you are able to describe something to a computer in a way that it can correctly simulate it, then you surely understand that thing!

Computers give you the ability to test and experiment with things without actually owning a physical version of them. You can literally simulate an atomic bomb on your computer and drop it in your imaginary world to see what happens. You can simulate a rocket that travels to Mars as a tiny step towards your dream of opening a space company. You can describe a 3D environment and try to understand how it feels to be there by putting your imaginary eyes in it and calculating what they will perceive as a computer image. Computers let you experiment with anything you can imagine without spending anything other than some of your time and talent.

The Super Programmer is going to show you "what can be done", and not "how it can be done". You are not going to learn data structures and algorithms here, but you are going to learn how you can embrace your creativity by using simple tools you already know. Thus, in many parts of this book, we are going to apply the simplest and least efficient solutions possible, because that is a better way to learn new things.

Some might argue that not all software engineers will ever need to learn any of the topics taught in this book. That's partially true. As an average programmer, you are not going to use any of this knowledge in your everyday work. Even if you do, you are probably not going to get creative and make anything substantially new out of it. Since the book is about old technology, most of the topics discussed in it have already been studied and developed about as far as they can be.

So why are we doing this? There are several reasons:

- The problem-solving strategies and patterns you’ll learn in this book can be applied directly to your everyday work!

- This book takes you on a journey through the entire landscape of technology, and by the end, nothing will feel intimidating.

- And most importantly, it’s fun!

First Principle Thinking

The Super Programmer is all about reinventing the wheel. Reinventing the wheel is considered bad practice by many professionals, and they are right to some extent. Reinventing the wheel not only steals your focus from the actual problem you are trying to solve, but there is also a very high chance you will end up with a wheel that is much worse than the wheels already made by other people. People have been working on wheels for a very long time, and you just can't beat their pile of experience. Still, reinventing the wheel can be beneficial in two scenarios:

- Learning: If you want to learn how a wheel works, build it yourself. There isn't a shortcut.

- Full control: If wheels are a crucial part of the solution you are proposing for a problem, you may want to build the wheels yourself. Customizing your own design is much easier than customizing someone else’s work.

If you want to build a car that outperforms every other on the market, you won’t settle for tweaking existing engines. You’ll need to design one yourself. Otherwise, you’ll end up with something that is only 'slightly better', and 'slightly better' isn’t enough to convince anyone to choose your car over a trusted brand.

Reinventing the wheel is a form of First Principle Thinking. This approach involves questioning all your assumptions and building solutions from the ground up by reassembling the most fundamental truths: the first principles.

First principle thinking is a crucial trait that sets successful people apart. It gives you more control over the problems you're solving because it allows you to think beyond conventional solutions.

Consider an extraordinary chef. He doesn't just know how to cook different dishes; he understands the raw ingredients, their flavors, and how they interact. He knows the "first principles" of cooking. This deep understanding enables him to create entirely new dishes—something a typical cook can’t do.

I hope I've made my point clear. With The Super Programmer, you'll gain all the ingredients you need to craft your own brilliant software recipes!

Computer Revolution

Believe it or not, the world has become far more complex than it was 500 years ago. We live in an era where specializing in a narrow, specific field of science can take a lifetime. With billions of people in the world, it’s nearly impossible to come up with an idea that no one has ever thought of before. In many ways, innovation has become less accessible. There are several reasons for this:

- We are afraid to innovate. Five hundred years ago, people didn’t have daily exposure to tech celebrities or compare themselves to others. They weren’t afraid of creating something unique, no matter how imperfect. They didn’t worry about “mimicking” or being outdone. They simply kept their creativity flowing, making, writing, and painting without fear of comparison. This is why their work was often so original, unique, and special.

- We don't know where to start. Back then, it was possible for someone to learn almost everything. There were polymaths: people who could paint, write poetry, design calendars, expand mathematics, engage in philosophy, and cure ailments, all at once. Famous examples include the Iranian philosopher and mathematician Omar Khayyam and the Italian Renaissance genius Leonardo Da Vinci. Today, however, the vast amount of knowledge being produced makes it impossible for anyone to be a true polymath. This doesn't mean humanity has declined; rather, the sheer volume of knowledge is overwhelming, making it hard to know where to focus or even begin.

This complexity leads to confusion. Ironically, if you asked someone from 100 years ago to design and build a car for you, they might be more likely to succeed than someone today. Early cars were simpler, and this simplicity gave people the confidence to guess how things worked and experiment. In contrast, in our era, guessing how something works often feels like the wrong approach.

The computer software revolution changed the "creativity industry". Programming was one of the first forms of engineering that required very little to get started. You don’t need a huge workshop or an extensive understanding of physics to create great software. You won’t run out of materials because software is made of something you can never run out of: characters! All you need is a computer and a text editor to bring your ideas to life. Unlike building physical objects, in the world of programming, you know exactly what the first step is!

> Hello World!

Synchronous vs Asynchronous Engineering

As someone who has only created software and never built anything sophisticated in the physical world, I find engineering physical objects much more challenging than developing computer programs.

I’ve often heard claims that software engineering is fundamentally different from other, more traditional fields of engineering like mechanical or electrical engineering. However, after talking with people who build non-software systems, I’ve come to realize they also find software development difficult and non-trivial.

After some reflection, I decided to categorize engineering into two broad fields: Synchronous vs Asynchronous.

Synchronous engineering involves designing solutions based on orderly, step-by-step subsolutions. A classic example of this is software engineering. When building a program, you arrange instructions (sub-solutions) in a specific sequence, assuming each instruction will work as expected. Similarly, designing a manufacturing line follows this approach—new subsolutions don’t interfere with the existing ones. You can add new steps or components without affecting the previous ones, and the system remains intact.

On the other hand, asynchronous engineering doesn’t follow a fixed order. The final solution is still composed of subsolutions, but these subsolutions operate simultaneously rather than sequentially. Examples include designing a building, a car engine, or an electronic circuit. In these fields, you must have a comprehensive understanding of how all the parts fit together, because changes in one area can disrupt the entire system. Adding a new component can break existing elements, unlike in software, where changes often don’t have the same kind of direct impact.

The Super Programmer aims to turn you into an engineer capable of building synchronous systems!

A quick glance at the chapters

The book is divided into six chapters, each designed to build upon the last, guiding you from foundational concepts to more complex ideas.

We begin by exploring the beauty of cause-and-effect chains, demonstrating how simple building blocks—like Lego pieces—can come together to form useful and intriguing structures. We'll dive into the history of transistors, how they work, and how we can simulate them using plain Python code. From there, we’ll implement basic logic gates by connecting transistors, and progressively build more complex circuits such as adders, counters, and eventually, a simple programmable computer. After constructing our computer, we’ll try to give it a "soul" by introducing the Brainfuck programming language. You’ll learn how to compile this minimalistic language for our computer and design impressively complex programs using it.

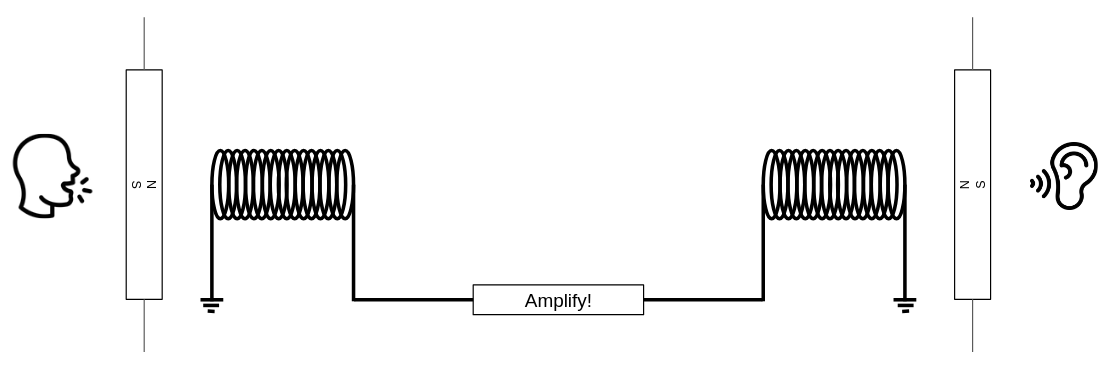

In the 2nd and 3rd chapters, we explore two of the most important human senses: hearing and vision. We’ll begin by examining the history of how humans invented methods to record visual and auditory information—through photos and tapes—and experience them later. We’ll then explore how the digital revolution forever changed the way we record and store media. By delving into simple computer file formats for images and audio, we’ll even create our own files. You'll learn how to generate data that can "please" both our ears and eyes.

Chapter 4 takes us into the fascinating world of artificial intelligence. We’ll draw parallels between biological neurons and tunable electronic gates, exploring how calculus and differentiation can be used to "train" these gates. You’ll gain hands-on experience by building a library for training neural networks via differentiating computation graphs. We’ll then explore some of the most important operations in neural-network models that understand language, and you’ll have the opportunity to implement your own basic language model.

In Chapter 5, we shift focus to how mathematics is used to bring trust into the digital world. We’ll begin by studying the history of cryptography and how digital documents are encrypted and signed using digital identities. We’ll examine the electronic-cash revolution and how math can protect us from malfeasance and help us navigate around government restrictions. You’ll learn how to prove knowledge without revealing the underlying information—an important concept for building truly private cryptocurrencies.

The final chapter is all about how computers and software can interact with the physical world. We’ll dive into physics, specifically classical mechanics, and explore how these laws can be used to simulate the world in our computers or to design machines capable of sending humans to Mars. We’ll also take a deep dive into electronics—the often-underestimated field that underpins modern computing. Many computer engineers, even the best ones, struggle to fully understand electronics, but it’s essential to grasp it in order to build effective systems. Throughout this chapter, we’ll use physics engines and circuit simulators to solidify these concepts.

What do I need to know?

As mentioned earlier, while this book focuses on concepts rather than specific implementations, I chose to write the code in a real programming language instead of using pseudocode. Pseudocode assumes that the reader is human and lacks the structural coherence of an actual programming language, which is crucial for the types of ideas we explore here.

I found Python to be a great choice, as it is one of the most popular languages among developers worldwide. I think of Python as the "English" of the programming community—easy to understand and write. While Python may not always be the most efficient language for the topics covered in this book, it is one of the easiest to use for prototyping and learning new concepts.

That said, you'll need to be familiar with the basics of Python. Some knowledge of *NIX-based operating systems may also be helpful in certain sections of the book.

Without a doubt, people are more invested in things they’ve built themselves. If you plan to implement the code in this book, I highly recommend doing it in a programming language other than Python. While Python is an excellent tool, using it might make you feel like you’re not doing anything new—you may end up just copying and pasting what you read in the book. On the other hand, rewriting the logic in another language forces you to deeply translate the concepts you’ve learned, leading to a better understanding of what’s happening. It will also give you the rewarding feeling of creating something from scratch, which can boost your motivation and dopamine levels!

How did I write this book?

As someone who had never written a book before, I found the process quite unclear. I wasn’t sure which text-editing software or format would make it easiest to convert my work into other formats. Since I needed to include a lot of code snippets and math equations, Microsoft Word didn’t seem like the best choice (at least, I wasn't sure how to do it efficiently with a word processor like Word). On top of that, I’m not a fan of LaTeX—its syntax feels cumbersome, and I wasn’t eager to learn it. My focus was on writing the actual content, not wrestling with formatting.

That’s when I decided on Markdown. It’s simple to use, and there’s plenty of software that can easily convert .md files to .html, .pdf, .tex, and more. Plus, if I ever needed to automate custom transformations of my text, Markdown is one of the easiest formats to parse and manipulate.

I wrote each chapter in separate .md files, and then created a Makefile to combine them into a single PDF. To do this, I used a Linux tool called pandoc, which made the conversion process seamless.

Here is what my Makefile looked like:

    pdf:
    	pandoc --toc --output=out.pdf *.md # Convert MD files to a PDF file
    	pdfunite cover.pdf out.pdf tsp.pdf # Add the book cover
    	pdftotext tsp.pdf - | wc -w # Show me the word count

The --toc flag automatically generated the Table of Contents section for me (based on the Markdown headers: #, ##, ...). I also had another .pdf file containing the cover of my book, which I concatenated with pandoc's output. I was tracking my progress by word count.
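To give a feel for why Markdown headers are enough to build a table of contents, here is a minimal sketch in Python. It is not how pandoc does it internally; the function name `table_of_contents` and the sample text are made up for illustration, and the sketch only looks at `#`-style headers (it would also pick up `#` comments inside code blocks, which a real tool has to skip).

```python
import re


def table_of_contents(markdown_text):
    """Collect #-style headers (#, ##, ...) as (level, title) pairs."""
    entries = []
    for line in markdown_text.splitlines():
        # One to six '#' characters, some whitespace, then the title.
        match = re.match(r"^(#{1,6})\s+(.*\S)\s*$", line)
        if match:
            entries.append((len(match.group(1)), match.group(2)))
    return entries


# Hypothetical sample input, only for demonstration.
sample = """# Compute!
Some text.
## When the dominos fall
More text.
"""

# Print each title, indented according to its header level.
for level, title in table_of_contents(sample):
    print("  " * (level - 1) + title)
```

The number of `#` characters gives the nesting level, so the whole outline can be recovered from a single pass over the lines.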

Compute!

Music of the chapter: Computer Love - By Kraftwerk

Which came first? The chicken or the egg?

Imagine, for a moment, that a catastrophic event occurs, causing us to lose all the technology we once had. All that remains is ashes, and our situation is similar to humanity's early days millions of years ago. But there's one key difference: we still have the ability to read, and fortunately, many books are available that explain how our modern technology once worked. Now, here's an interesting question: How long would it take for us to reach the level of advancement we have today?

My prediction is that we would get back on track within a few decades. The only reason it might take longer than expected is that we would need tools to build other tools. For example, we can't just start building a modern CPU, even if we have the specifications and detailed design. First, we would need to rebuild the tools required to create complex electronic circuits. If we are starting from scratch, as we've assumed, we would also need to relearn how to find and extract the materials we need from the Earth, and then use those materials to make simpler tools. These simpler tools would then allow us to create more advanced ones. Here's the key point that makes all of our technological progress possible: technology can accelerate the creation of more technology!

Just like the chicken-and-egg paradox, I’ve often wondered how people built the very first compilers and assemblers. What language did they use to describe the first assembly languages? Did they have to write early assembler programs directly in 0s and 1s? After some research, I discovered that the answer is yes. In fact, as an example, the process of building a C compiler for the first time goes like this:

- First, you write a C compiler directly in machine code or assembly (or some other lower-level language).

- Next, you rewrite the same C compiler in C.

- Then, you compile the new C source code using the compiler written in assembly.

- At this point, you can completely discard the assembly implementation and treat the C compiler as if it were originally written in C.

See this beautiful loop? Technology sustains and reproduces itself, which essentially means technology is a form of life! The simpler computer language (in this case, machine-assembly) helped the C compiler emerge, but once the compiler was created, it could stand on its own. We no longer need the machine-assembly version, since there’s nothing stopping us from describing a C compiler in C itself! This phenomenon isn’t limited to software: a hammer, too, can be used to build new hammers!

Now, let's return to our original question: Which came first, the chicken or the egg? If we look at the history of evolution, we can see that creatures millions of years ago didn’t reproduce by laying eggs. For example, basic living cells didn’t reproduce by laying eggs; they simply split in two. As living organisms evolved and became more complex, processes like egg-laying gradually emerged. The very first chicken-like animal that started laying eggs didn’t necessarily hatch from an egg. It could have been a mutation that introduced egg-laying behavior in a new generation, much like how a C compiler could come to life without depending on an assembly implementation.

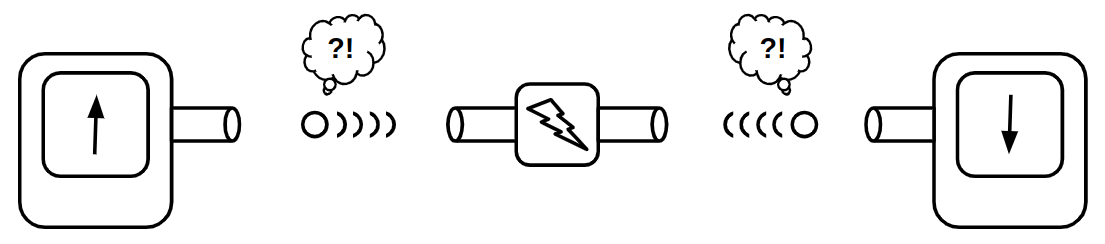

When the dominos fall

If you ask someone deeply knowledgeable about computers how a computer works at the most fundamental level, they’ll probably start by talking about electronic switches, transistors, and logic gates. Well, I’m going to take a similar approach, but with a slight twist! While transistors are the basic building blocks of nearly all modern computers, the real magic behind what makes computers "do their thing" is something I like to call "Cause & Effect" chains.

Let’s begin by looking at some everyday examples of cause-and-effect scenarios. Here’s my list (feel free to add yours as you think of them):

- You press a key on your computer → A character appears on the screen → END

- You push the first domino → The next domino falls → The next domino falls → ...

- You ask someone to open the window → He opens the window → END

- You tell a rumor to your friend → He tells the rumor to his friend → ...

- You toggle the switch → The light bulb turns on → END

- A neuron fires in your brain → Another neuron gets excited and fires → ...

Now, let’s explore why some of these cause-and-effect chains keep going, setting off a series of events that lead to significant changes in their environment, while others stop after just a few steps. Why do some things trigger a cascade of actions, while others don’t?

There's an important pattern here: The long-lasting (i.e., interesting) effects emerge from cause-and-effect chains where the effects are of the same type as the causes. This means that these chains become particularly interesting when they can form cycles. This happens, for example, when a "mechanical" cause leads to another "mechanical" effect (like falling dominos), or when an "electrical" cause triggers another electrical effect (like in electronic circuits or the firing of neurons in your brain).

The most complex thing you can create with components that transform a single cause into a single effect is essentially no different from a chain of falling dominoes (or when rumors circulate within a company). While it’s still impressive and has its own interesting aspects, we don’t want to stop there. The real magic happens when you start transforming multiple causes into a single effect—especially when all the causes are of the same type. That’s when Cause & Effect chains truly come to life!
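To make the single-cause, single-effect case concrete, here is a minimal sketch of a domino chain in Python. The function name `push_dominoes` and the list-of-booleans representation are illustrative choices of mine, not something defined elsewhere in the book.

```python
def push_dominoes(count, first_pushed=True):
    """Simulate a row of dominoes where each one falls only if the
    previous one fell (a single cause producing a single effect)."""
    fallen = []
    cause = first_pushed  # the initial push is the first cause
    for _ in range(count):
        effect = cause        # a domino falls exactly when it gets hit
        fallen.append(effect)
        cause = effect        # the effect becomes the cause for the next one
    return fallen


print(push_dominoes(5))         # every domino falls
print(push_dominoes(5, False))  # nothing happens without the first push
```

However long the row is, the chain can only pass the first push along. It cannot combine two causes into a decision, which is why components with more than one input are needed.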

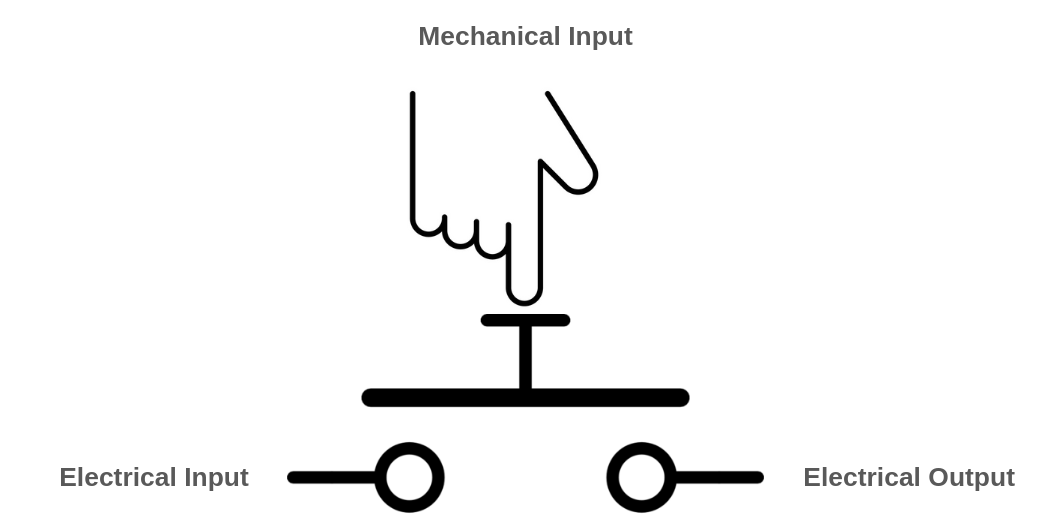

A simple example of a multi-input/single-output component is a switch. A switch controls the flow of an input to an output through a third, controlling input. Light switches, push buttons, and faucets (which control the flow of water through a pipe) are all examples of switches. However, in these cases, the type of the controlling input is different from the other inputs and outputs. For instance, the controlling input of a push button is mechanical, while the others are electrical. You still need a finger to push the button, and the output of the push button cannot be used as the mechanical input of another button. As a result, you can't build domino-like structures or long-lasting cause-and-effect chains out of faucets or push buttons the way you could with components whose inputs and outputs are all of the same type!

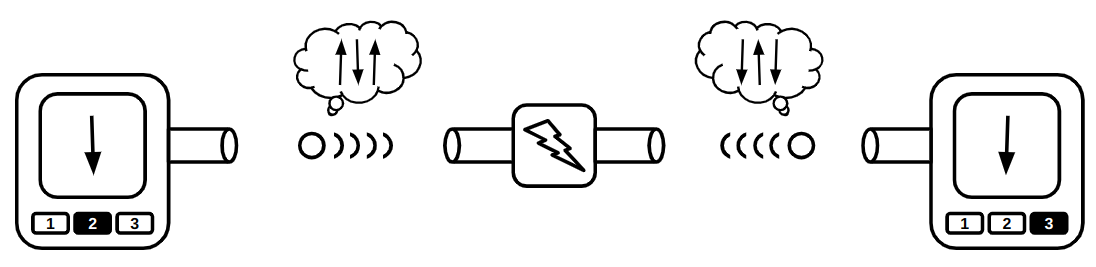

A push-button becomes more interesting when its controlling input is electrical rather than mechanical. In this case, all of its inputs and outputs are of the same type, allowing you to connect the output of one push-button to the controlling input of another.

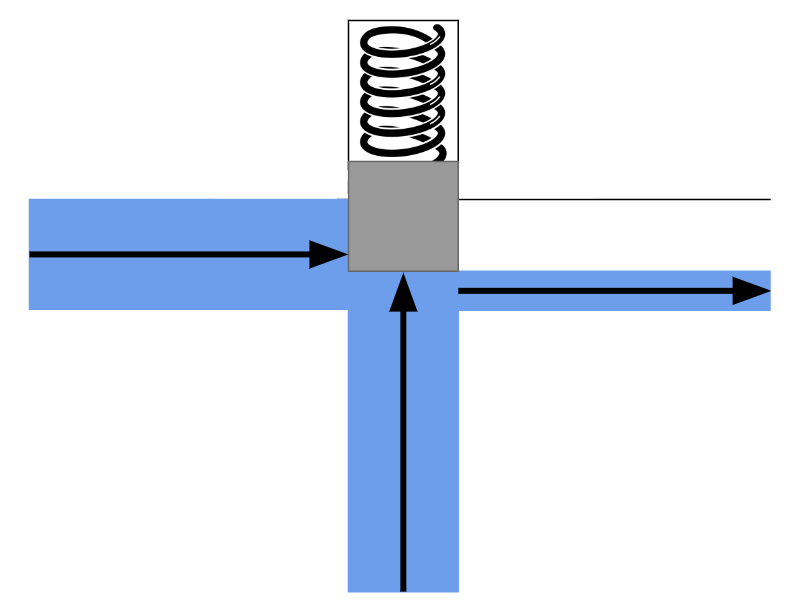

Similarly, in the faucet example, you could design a special kind of faucet that opens with the force of water, rather than requiring a person to open it manually with their hands. In the picture below, when no water is present in the controlling pipe (the pipe in the middle), the spring will push the block down, preventing water from flowing from the left pipe to the right pipe. However, when water enters the controlling pipe, its pressure will push the block up, creating space for the water to flow from the left pipe to the right pipe.

The controlling input doesn't have to sharply switch the flow between no-flow and maximum-flow. Instead, the controller can adjust the flow to any level, depending on how much the controlling input is pushed. Additionally, the controlling input doesn't need to be as large or powerful as the other inputs/outputs. It could be a thinner pipe that requires only a small force of water to fully open the valve. This means you can control a large and powerful water flow using a much smaller input and less force.

There's also a well-known name for these unique switches: transistors. Now you can understand what "transistor" literally means: the word blends "transfer" and "resistor", describing something that can adjust resistance on demand, much like how we can control the resistance of the water flow in a larger pipe using a third input.

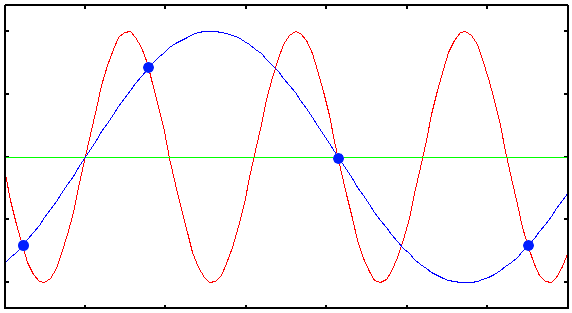

The most obvious use case for a transistor is signal amplification. Let’s explore this with an example: imagine a small pipe through which water flows in a sinusoidal pattern. Initially, there is no water, then the flow gradually increases to a certain level, and finally, it slowly decreases back to zero (a pattern similar to what you might see in a water show!). Now, we connect this pipe to the controlling input of the water transistor we designed earlier. Can you guess what comes out from the output of this transistor? Yes, the output is also a sinusoidal flow of water. The difference is that it’s much more powerful than the small pipe. We’ve just transferred the sinusoidal pattern from a small water flow to a much more powerful one! Can you imagine how important this is? This concept forms the core of how devices like electric guitars and sound amplifiers work!
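To make the amplification idea a bit more concrete, here is a tiny numerical sketch. It assumes an idealized transistor whose output flow is simply proportional to the controlling flow; the names `GAIN` and `amplify` and the value 20 are made up for illustration and don't describe any real device.

```python
import math

GAIN = 20.0  # hypothetical: how many times stronger the big pipe's flow is


def amplify(control_flow, gain=GAIN):
    """Idealized transistor: the output flow is proportional to the control flow."""
    return gain * control_flow


# Half a period of a sine wave: the flow rises from zero and falls back to zero.
samples = [math.sin(math.pi * i / 8) for i in range(9)]
amplified = [amplify(s) for s in samples]

for small, big in zip(samples, amplified):
    print(f"{small:5.2f} -> {big:6.2f}")
```

The shape of the signal is untouched; only its scale changes, which is exactly what we want from an amplifier.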

That’s not the only use case for transistors, though. Because the cause-and-effect types of the inputs and outputs are the same, we can chain them together—and that’s all we need to start building machines that can compute things (we’ll explore the details soon!). These inputs don’t necessarily have to be electrical; they can be mechanical as well. Yes, we can build computers that operate using the force of water!

A typical transistor in the real world is conceptually the same as our imaginary faucet described above. Substitute water with electrons, and you have an accurate analogy for a transistor. Transistors, the building blocks of modern computers, allow you to control the flow of electrons in a wire using a third source of electrons!

Understanding how modern electron-based transistors work involves a fair bit of physics and chemistry. But if you insist, here’s a very simple (and admittedly silly) example of a push-button with an electrical controller: Imagine a person holding a wire in their hand. This person presses the push-button whenever they feel electricity in their hand (as long as the electricity isn’t strong enough to harm them). Together, the person and the push-button form something akin to a transistor, because now the types of all inputs and outputs in the system are the same.

It might sound like the strangest idea in the world, but if you had enough of these "transistors" connected together, you could theoretically build a computer capable of connecting to the internet and rendering webpages!

Why did people choose electrons to flow in the veins of our computers instead of something like water? There are several reasons:

- Electrons are extremely small.

- Electrical effects propagate extremely fast!

Thanks to these properties, we can create complex and dense cause-and-effect chains that produce amazing behaviors while using very little space, like in your smartphone!

Taming the electrons

In the previous section, we explored what a transistor is. Now it’s time to see what we can do with it! To quickly experiment with transistors, we’ll simulate imaginary transistors on a computer and arrange them in the right order to perform fancy computations for us.

Our ultimate goal is to construct a fully functional computer using a collection of three-way switches. If you have prior experience designing hardware, you're likely familiar with software tools that allow you to describe hardware using a Hardware Description Language (HDL). These tools can generate transistor-level circuits from your designs and even simulate them for you. However, since this book focuses on the underlying concepts rather than real-world, production-ready implementations, we will skip the complexity of learning an HDL. Instead, we'll use the Python programming language to emulate these concepts as we progress. This approach will not only deepen our understanding of the transistor-level implementation of something as intricate as a computer but also shed light on how HDL tools transform high-level circuit descriptions into collections of transistors.

So let's begin and start building our own circuit simulator!

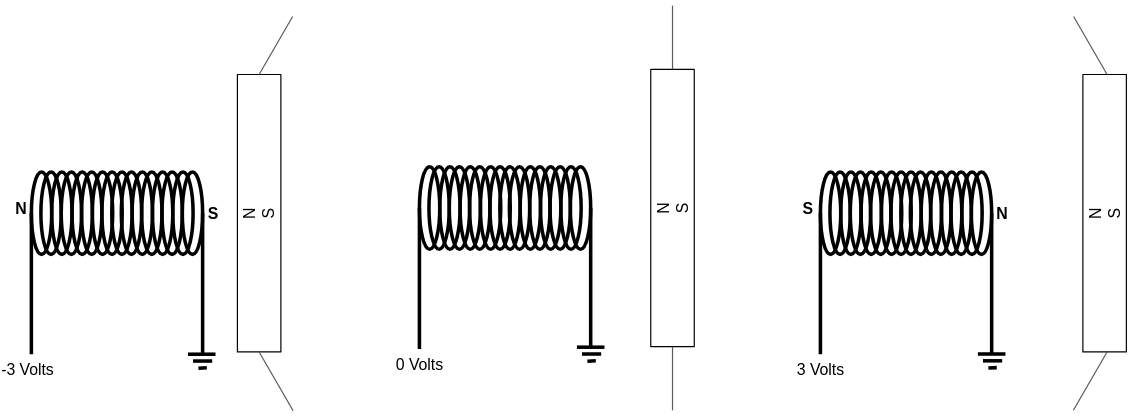

Everything is relative

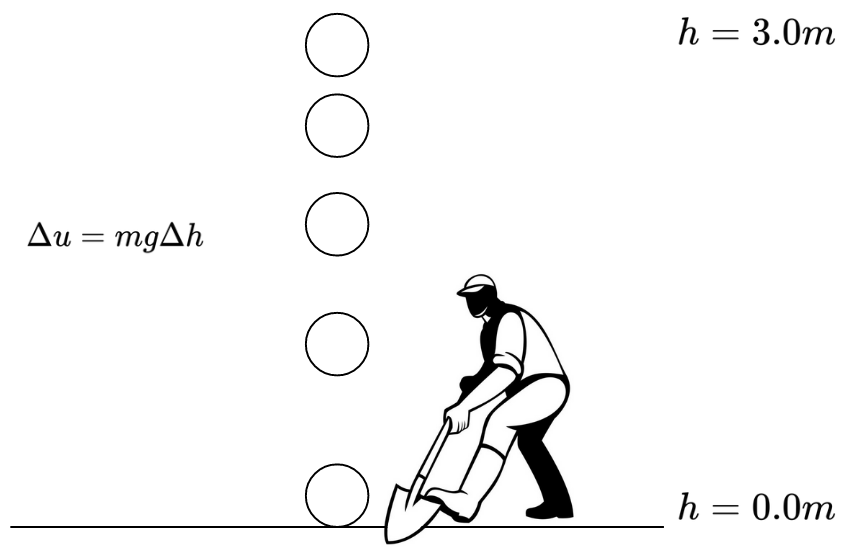

The very first term you encounter when exploring electricity is "voltage," so it's essential to grasp its meaning before studying how transistors behave under different voltages. Formally, voltage is the difference in electric potential energy, per unit of charge, between two points in a circuit. To understand this concept, let's set aside electricity for a moment and think about heights.

Imagine lifting a heavy object from the ground. The higher you lift it, the more energy you expend (and the more tired you feel, right? That energy needs to be replenished, like by eating food!). According to the law of conservation of energy, energy cannot be created or destroyed, only transformed or transferred. So where does the energy you used to lift the object go?

Now, release the object and let it fall. It hits the ground with a bang, possibly breaking the ground—or itself. The higher the object was lifted, the greater the damage it causes. Clearly, energy is needed to create those noises and damages. But where did that energy come from?

You’ve guessed it! When you lifted the object, you transferred energy to it. When you let it fall, that stored energy was released. Physicists call this stored energy "potential energy." A raised object has the potential to do work (work is the result of energy being consumed), which is why it’s called potential energy!

If you remember high school physics, you'll know that the potential energy of an object can be calculated using the formula \(U=mgh\), where \(m\) is the mass of the object, \(h\) is its height above the ground, and \(g\) is the gravitational acceleration near the Earth's surface (approximately \(9.807\ \mathrm{m/s^2}\)).

According to this formula, when the object is on the ground (\(h=0\)), the potential energy is also \(U=0\). But here’s an important question: does that mean an object lying on the ground has no potential energy?

Actually, it does have potential energy! Imagine taking a shovel and digging a hole under the object. The object would fall into the hole, demonstrating that it still had the capacity to do work due to gravity.

The key point is this: the equation \(U=mgh\) represents the potential energy difference relative to a chosen reference point (in this case, the ground), not the absolute potential energy of the object. A more precise way to express this idea would be: \(\Delta U = mg\,\Delta h\).

This equation shows that the potential energy difference between two points \(A\) and \(B\) depends on \(mg\), the gravitational force, and \(\Delta h\), the difference in height between the two points.

In essence, potential energy is relative!
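If you'd like to see this with numbers, here is a small sketch. The mass, the heights, and the helper name `potential_energy` are made-up example values; the only thing taken from the text is the formula itself.

```python
G = 9.807  # gravitational acceleration near the Earth's surface, in m/s^2


def potential_energy(mass, height, reference_height=0.0):
    """Potential energy (in joules) measured relative to a chosen reference height."""
    return mass * G * (height - reference_height)


mass = 2.0               # kg
table, floor = 1.0, 0.0  # heights in meters, measured from the floor

# Using the floor as the reference point:
u_table_a = potential_energy(mass, table, reference_height=0.0)
u_floor_a = potential_energy(mass, floor, reference_height=0.0)

# Using the bottom of a 3 m deep hole as the reference point:
u_table_b = potential_energy(mass, table, reference_height=-3.0)
u_floor_b = potential_energy(mass, floor, reference_height=-3.0)

print(u_table_a, u_floor_a)  # the absolute numbers depend on the reference...
print(u_table_b, u_floor_b)
print(u_table_a - u_floor_a, u_table_b - u_floor_b)  # ...the difference does not
```

Whichever reference point we pick, the difference between the table and the floor comes out the same, and that difference is the only thing we can actually measure.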

The reason it takes energy to lift an object is rooted in the fact that massive bodies attract each other, as described by the universal law of gravitation: \(F=G\frac{m_1m_2}{r^2}\).

Similarly, a comparable law exists in the microscopic world, where electrons attract protons, and like charges repel each other. This interaction is governed by Coulomb's law: \(F=k_e\frac{q_1q_2}{r^2}\)

As a result, we also have the concept of "potential energy" in the electromagnetic world. It takes energy to separate two electric charges of opposite types (positive and negative), as you're working against the attractive electric force. When you pull them apart and then release them, they will move back toward each other, releasing the stored potential energy.

That's essentially how batteries work. They create potential differences by moving electrons to higher "heights" of potential energy. When you connect a wire from the negative pole of the battery to the positive pole, the electrons "fall" through the wire, releasing energy in the process.

When we talk about "voltage," we are referring to the difference in height or potential energy between two points. While we might not know the absolute potential energy of points A and B, we can definitely measure and understand the difference in potential (voltage) between them!

Pipes of electrons

We discussed that transistors are essentially three-way switches that control the flow between two input/output paths using a third input. When building a transistor circuit simulator—whether simulating the flow of water or electrons—it makes sense to begin by simulating the "pipes," the elements through which electrons will flow. In electrical circuits, these "pipes" are commonly referred to as wires.

Wires are likely to be the most fundamental and essential components of our circuit simulation software. As their name suggests, wires are conductors of electricity, typically made of metal, that allow different components to connect and communicate via the flow of electricity. We can think of wires as containers that hold electrons at specific voltage levels. If two wires with different voltage levels are connected, current will flow from the wire with the higher voltage to the one with the lower voltage (the electrons themselves, being negatively charged, drift in the opposite direction).

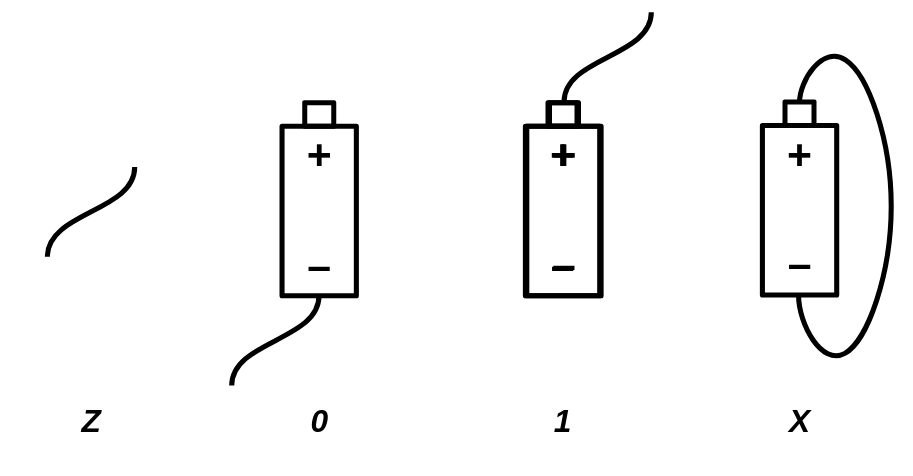

In digital circuits, transistors and other electronic components operate using two specific voltage levels: "zero" (commonly referred to as ground) and "one." It’s important to emphasize that the terms "zero" and "one" do not correspond to fixed or absolute values. Instead, "zero" acts as a reference point, while "one" represents a voltage level that is higher relative to "zero."

At first glance, it might seem that we could model a wire using a single boolean value: false to represent zero and true to represent one. However, this approach fails to account for all the possible scenarios that can arise in a circuit simulator.

For example, consider a wire in your simulator that isn't connected to either the positive or negative pole of a battery. Would it even have a voltage level? Clearly, it would be neither 0 nor 1. Now imagine a wire that connects a high-voltage (1) wire to a low-voltage (0) wire. What would the voltage of this intermediary wire be? Current would start flowing from the higher-voltage wire to the lower-voltage wire, and as a result, the voltage of the intermediary wire would settle somewhere between 0 and 1.

In a digital circuit, connecting a 0 wire to a 1 wire is not always a good idea and could indicate a mistake in your circuit design, potentially causing a short circuit. Therefore, it is useful to consider a value for a wire that is either mistakenly or intentionally left unconnected (Free state) or connected to both 0 and 1 voltage sources simultaneously (Short-circuit/Unknown state).

Based on this explanation, a wire can be in four different states:

- Z state - The wire is free and not connected to anything.

- 0 state - The wire is connected to a ground voltage source and has 0 voltage.

- 1 state - The wire is connected to a 5.0V voltage source.

- X state - The wire's voltage cannot be determined because it is connected to both a 5.0V and 0.0V voltage source simultaneously.

Initially, a wire that is not connected to anything is in the Z state. When you connect it to a gate or wire that has a state of 1, the wire's state will also become 1. A wire can connect to multiple gates or wires at the same time. For example, if a wire already in the 1 state is connected to another source of 1, it will remain 1. However, the wire will enter the X state if it is connected to a wire or gate with a conflicting value. For instance, if the wire is connected to both 0 and 1 voltage sources simultaneously, its state will be X. Similarly, connecting any wire to an X voltage source will also result in the wire taking on the X state.

We can summarize all the possible scenarios in a custom table that defines the arithmetic of wire states:

| A | B | A + B |
|---|---|---|
| Z | Z | Z |
| 0 | Z | 0 |
| 1 | Z | 1 |
| X | Z | X |
| Z | 0 | 0 |
| 0 | 0 | 0 |
| 1 | 0 | X |
| X | 0 | X |
| Z | 1 | 1 |
| 0 | 1 | X |
| 1 | 1 | 1 |
| X | 1 | X |
| Z | X | X |
| 0 | X | X |
| 1 | X | X |
| X | X | X |

A wildcard version of this table, where the lowercase x stands for any state (not to be confused with the uppercase X state), would look like this:

| A | B | A + B |
|---|---|---|
| Z | x | x |
| x | Z | x |
| X | x | X |
| x | X | X |
| 0 | 0 | 0 |
| 1 | 1 | 1 |
| 0 | 1 | X |
| 1 | 0 | X |
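The wire arithmetic above can be sketched as one small function. This is only an illustration of the table: the function name `combine` and the constant names are picked for this snippet.

```python
FREE, UNK, ZERO, ONE = "Z", "X", 0, 1


def combine(a, b):
    """Resulting state of a wire that is driven with both a and b."""
    if a == FREE:
        return b    # Z + x = x
    if b == FREE:
        return a    # x + Z = x
    if a == UNK or b == UNK:
        return UNK  # anything combined with X stays X
    return a if a == b else UNK  # equal values agree, 0 against 1 is a conflict


states = [FREE, ZERO, ONE, UNK]
for b in states:
    for a in states:
        print(a, "+", b, "=", combine(a, b))
```

Running it prints the same sixteen rows as the full table, in the same order.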

Based on the explanation, we can model a wire as a Python class:

```python
FREE = "Z"  # not connected to anything
UNK = "X"   # conflicting or undeterminable value
ZERO = 0
ONE = 1


class Wire:
    def __init__(self):
        self._drivers = {}   # maps each driver to the value it applies
        self._assume = FREE  # fallback value used while nothing drives the wire

    def assume(self, val):
        self._assume = val

    def get(self):
        curr = FREE
        for b in self._drivers.values():
            if b == UNK:
                return UNK
            elif b != FREE:
                if curr == FREE:
                    curr = b
                elif b != curr:
                    return UNK  # two drivers disagree
        return curr if curr != FREE else self._assume

    def put(self, driver, value):
        is_changed = self._drivers.get(driver) != value
        self._drivers[driver] = value
        return is_changed
```

The code above models a wire as a Python class. By definition, a wire that is not connected to anything remains in the Z state. Using the put function, a driver (such as a battery or a gate) can apply a voltage to the wire. The final voltage of the wire is determined by iterating over all the voltages applied to it.

We store the voltage values applied to the wire in a dictionary to ensure that a single driver cannot apply two different values to the same wire.

The put function also checks whether there has been a change in the values applied to the wire. This will later help our simulator determine if the circuit has reached a stable state, where the values of all wires remain fixed.

In some cases—particularly in circuits containing feedback loops and recursion—it is necessary to assume that a wire already has a value in order to converge on a solution. For this purpose, we have designed the assume() function, which allows us to assign an assumed value to a wire if no gates have driven a value into it. If you don’t yet fully understand what the assume() function does, don’t worry—we’ll explore its usage in the next sections.
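Here is a short usage sketch of the wire model, including assume(). The class is repeated in compact form only so that the snippet runs on its own, and the driver names ("driver_a", "driver_b") are arbitrary placeholders; any hashable object can act as a driver.

```python
FREE, UNK, ZERO, ONE = "Z", "X", 0, 1


class Wire:
    # Compact copy of the Wire class, repeated so this snippet is self-contained.
    def __init__(self):
        self._drivers = {}
        self._assume = FREE

    def assume(self, val):
        self._assume = val

    def get(self):
        curr = FREE
        for b in self._drivers.values():
            if b == UNK:
                return UNK
            elif b != FREE:
                if curr == FREE:
                    curr = b
                elif b != curr:
                    return UNK
        return curr if curr != FREE else self._assume

    def put(self, driver, value):
        is_changed = self._drivers.get(driver) != value
        self._drivers[driver] = value
        return is_changed


w = Wire()
print(w.get())                 # Z: nothing drives the wire yet
w.assume(ZERO)
print(w.get())                 # 0: the assumed value is used while undriven
print(w.put("driver_a", ONE))  # True: this driver placed a new value
print(w.get())                 # 1: a real driver overrides the assumption
print(w.put("driver_a", ONE))  # False: same driver, same value, no change
w.put("driver_b", ZERO)
print(w.get())                 # X: two drivers disagree
```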

Magical switches

Now that we've successfully modeled wires, it's time to implement the second most important component of our simulator: the transistor.

Transistors are electrically controlled switches with three pins: base, emitter, and collector. These pins connect to other circuit elements through wires. The base pin acts as the switch’s controller—when there is a high potential difference between the base and collector pins, the emitter connects to the collector. Otherwise, the emitter remains unconnected, behaving like a floating wire in the Z state.

This key behavior means that turning off the transistor does not set the emitter to 0 but instead leaves it in the Z state.
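As a rough sketch, the switching behavior just described can be written as a single function. Note the assumptions: `n_switch` is a made-up helper, it models an idealized switch that turns on when its base is 1, and it simply reports X for a floating or faulty base.

```python
FREE, UNK, ZERO, ONE = "Z", "X", 0, 1


def n_switch(base, collector):
    """Value an idealized transistor places on its emitter (hypothetical helper)."""
    if base == ONE:
        return collector  # switch closed: the emitter follows the collector
    if base == ZERO:
        return FREE       # switch open: the emitter is left floating
    return UNK            # base floating or faulty: the output cannot be trusted


for base in (ZERO, ONE):
    for collector in (ZERO, ONE):
        print(f"base={base} collector={collector} -> emitter={n_switch(base, collector)}")
```

Notice that with the base at 0 the result is Z for both collector values: the switch says nothing at all about the emitter, which is very different from driving it with 0.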

We can also describe the wire arithmetic of a transistor using the following table, where B is the base, C is the value on the collector, and E is the resulting value on the emitter:

| B | C | E |
|---|---|---|
| 0 | 0 | Z |
| 0 | 1 | Z |
| 1 | 0 | 0 (Strong) |
| 1 | 1 | 1 (Weak) |

The transistor we have been discussing so far is a Type-N transistor. The Type-N transistor turns on when the base wire is driven with a 1. There is also another type of transistor, known as Type-P, which turns on when the base wire is driven with a 0. The truth table for a Type-P transistor looks like this:

| B | C | E |
|---|---|---|
| 0 | 0 | 0 (Weak) |
| 0 | 1 | 1 (Strong) |
| 1 | 0 | Z |
| 1 | 1 | Z |

Assuming we define a voltage of 5.0V as 1 and a voltage of 0.0V as 0, a wire is driven with a strong 0 when its voltage is very close to 0 (e.g., 0.2V or 0.3V), and with a strong 1 when its voltage is close to 5 (e.g., 4.7V or 4.8V). The truth is, the transistors we build in the real world aren't ideal, so they won't always provide strong signals. A signal is considered weak when it's far from both 0.0V and 5.0V. For example, a voltage of 4.0V could be considered a weak 1, and a voltage of 1.0V a weak 0. As the tables above show, Type-N transistors, when built in the real world, are very good at producing strong 0 signals, but their 1 signals tend to be weak. Type-P transistors, on the other hand, produce strong 1 signals, but their 0 signals are weak. By using both types of transistors together, we can build logic gates that provide strong outputs in all cases.
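To play with the idea of strong and weak signals, here is a tiny classifier. The 0.5V tolerance (`MARGIN`) and the half-way decision point are assumptions made for this sketch; they were picked only so that the example voltages above land where the text puts them, and real logic families define their own thresholds.

```python
VDD = 5.0     # voltage that represents logical 1
MARGIN = 0.5  # hypothetical tolerance: how close to a rail counts as "strong"


def classify(voltage, vdd=VDD, margin=MARGIN):
    """Label a voltage as a strong/weak 0 or 1 (illustrative thresholds only)."""
    bit = 1 if voltage >= vdd / 2 else 0
    rail = vdd if bit == 1 else 0.0
    strength = "strong" if abs(voltage - rail) <= margin else "weak"
    return f"{strength} {bit}"


for v in (0.2, 0.3, 1.0, 4.0, 4.7, 4.8):
    print(f"{v:.1f} V -> {classify(v)}")
```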

The Transistor

In our digital circuit simulator, we’ll have two different types of components: primitive components and components that are made of primitives. We’ll define components as primitives when they can’t be described as a group of smaller primitives.

For example, we can simulate Type-N and Type-P transistors as primitive components, since everything else can be constructed by combining Type-N and Type-P transistors together.

```python
class NTransistor:
    def __init__(self, in_base, in_collector, out_emitter):
        self.in_base = in_base
        self.in_collector = in_collector
        self.out_emitter = out_emitter

    def update(self):
        b = self.in_base.get()
        if b == ONE:
            return self.out_emitter.put(self, self.in_collector.get())
        elif b == ZERO:
            return self.out_emitter.put(self, FREE)
        elif b == UNK:
            return self.out_emitter.put(self, UNK)
        else:
            return True  # Trigger re-update


class PTransistor:
    def __init__(self, in_base, in_collector, out_emitter):
        self.in_base = in_base
        self.in_collector = in_collector
        self.out_emitter = out_emitter

    def update(self):
        b = self.in_base.get()
        if b == ZERO:
            return self.out_emitter.put(self, self.in_collector.get())
        elif b == ONE:
            return self.out_emitter.put(self, FREE)
        elif b == UNK:
            return self.out_emitter.put(self, UNK)
        else:
            return True  # Trigger re-update
```

Our primitive components are classes with an update() function. The update() function is called whenever we want to calculate the output of the primitive based on its inputs. As a convention, we will prefix the input and output wires of our components with in_ and out_, respectively.

The update() function of our primitive components will also return a boolean value, which indicates whether the element needs to be updated again. Sometimes, the inputs of a component might not be ready when the update() function is called. For example, in the case of transistors, if the base wire is in the Z state, we assume there is another transistor that needs to be updated before the current transistor can calculate its output. By returning this boolean value, we inform our circuit emulator that the transistor is not "finalized" yet, and the update() function needs to be called again before determining that all component outputs have been correctly calculated and the circuit is stabilized.

Additionally, remember that the put() function of the Wire class also returns a boolean value. This value indicates whether the driver of that wire has placed a new value on the wire. A new value on a wire means there has been a change in the circuit, and the entire circuit needs to be updated again.

The Circuit

Now, it would be useful to have a data structure for keeping track of the wires and transistors allocated in a circuit. We will call this class Circuit. It will provide methods for creating wires and adding transistors. The Circuit class is responsible for calling the update() function of the primitive components and will allow you to calculate the values of all the wires in the network.

```python
class Circuit:
    def __init__(self):
        self._wires = []
        self._comps = []
        self._zero = self.new_wire()
        self._zero.put(self, ZERO)
        self._one = self.new_wire()
        self._one.put(self, ONE)

    def one(self):
        return self._one

    def zero(self):
        return self._zero

    def new_wire(self):
        w = Wire()
        self._wires.append(w)
        return w

    def add_component(self, comp):
        self._comps.append(comp)

    def num_components(self):
        return len(self._comps)

    def update(self):
        has_changes = False
        for t in self._comps:
            has_changes = has_changes | t.update()
        return has_changes

    def stabilize(self):
        while self.update():
            pass
```

The update() method of the Circuit class calculates the values of the wires by iterating through the transistors and calling their update method. In circuits with feedback loops, a single iteration of updates may not be sufficient, and multiple iterations may be needed before the circuit reaches a stable state. To address this, we introduce an additional method specifically designed for this purpose: stabilize(). This method repeatedly performs updates until no changes are observed in the wire values, meaning the circuit has stabilized.
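To see why a single pass is not always enough, here is a toy sketch of the same "update until nothing changes" loop. It deliberately avoids wires and transistors: `Copier` is a made-up component that copies one named signal to another, and this `stabilize` is a standalone function written for the snippet that also counts the passes.

```python
class Copier:
    """Toy component: copies the value of one named signal to another."""

    def __init__(self, signals, src, dst):
        self.signals, self.src, self.dst = signals, src, dst

    def update(self):
        new = self.signals[self.src]
        changed = self.signals[self.dst] != new
        self.signals[self.dst] = new
        return changed  # True means "something moved, please run another pass"


def stabilize(components):
    """Call update() on every component until a full pass reports no change."""
    passes = 0
    while True:
        passes += 1
        changed = False
        for comp in components:
            changed = comp.update() or changed
        if not changed:
            return passes


signals = {"a": 1, "b": 0, "c": 0, "d": 0}
# Deliberately listed "backwards", so one pass moves the value only one step.
chain = [Copier(signals, "c", "d"), Copier(signals, "b", "c"), Copier(signals, "a", "b")]
passes = stabilize(chain)
print(passes, signals)  # 4 {'a': 1, 'b': 1, 'c': 1, 'd': 1}
```

The value needs three passes to travel down the chain, and a fourth pass to confirm that nothing changes anymore.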

Our Circuit class also provides global zero() and one() wires, which can be used by components requiring fixed 0 and 1 signals. These wires function like battery poles in our circuits.

Electronic components can be defined as functions that take a circuit as input and add wires and transistors to it. Let’s explore the implementation details of some of them!

Life in a non-ideal world

Digital circuits are essentially logical expressions that are automatically evaluated by the flow of electrons through what we refer to as gates. Logical expressions are defined using zeros and ones, but as we have seen, wires in an electronic circuit are not always guaranteed to be either 0 or 1. Therefore, we must redefine our gates and determine their output in cases where the inputs are faulty.

Consider a NOT gate as an example. In an ideal world, its truth table would be as follows:

| A | NOT A |
|---|---|
| 0 | 1 |
| 1 | 0 |

However, the real world is not ideal, and wires connected to electronic logic gates can have unexpected voltages. Since a wire in our emulation can have four different states, our logic gates must be able to handle all four. The following is the definition of a NOT gate using our wire arithmetic. If the input to the NOT gate is Z or X, the output will be the faulty state X.

| A | NOT A |
|---|---|
| 0 | 1 |
| 1 | 0 |
| Z | X |
| X | X |

There are two ways to simulate gates in our software. We can either implement them using plain Python code as primitive components, similar to transistors, or we can construct them as a circuit of transistors. The following is an example of a NOT gate implemented using the first approach:

```python
class Not:
    def __init__(self, wire_in, wire_out):
        self.wire_in = wire_in
        self.wire_out = wire_out

    def update(self):
        # Return put()'s result so that a Circuit can tell whether anything changed.
        v = self.wire_in.get()
        if v == ZERO:
            return self.wire_out.put(self, ONE)
        elif v == ONE:
            return self.wire_out.put(self, ZERO)
        else:
            # A floating (Z) or faulty (X) input yields a faulty output.
            return self.wire_out.put(self, UNK)
```

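We can also test our implementation:

```python
if __name__ == '__main__':
    inp = Wire()
    inp.put("battery", ZERO)  # drive the input with 0; any hashable object can be a driver
    out = Wire()
    gate = Not(inp, out)
    gate.update()
    print(out.get())  # prints 1
```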

The NOT gate modeled as a primitive component is accurate and functions as expected. However, we know that a NOT gate is actually built from transistors, and it might be more interesting to model it using a pair of transistors rather than cheating by emulating its high-level behavior with Python code.

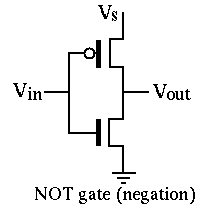

The following is an example of a NOT gate constructed using a P-type and an N-type transistor:

```python
def Not(circuit, inp, out):
    circuit.add_component(PTransistor(inp, circuit.one(), out))
    circuit.add_component(NTransistor(inp, circuit.zero(), out))
```

As we saw, an N-type transistor connects its collector to its emitter when its base is driven with 1. So, when the inp wire is driven with 1, the output gets connected to the circuit.zero() wire, causing out to hold a 0 signal. Notice that the P-type transistor is off when inp is 1, so the circuit.one() wire will not get connected to the output. If it were connected, we would get a short circuit, causing the out signal to become faulty (X).

Likewise, when inp is 0, the P-type transistor turns on and connects the output to circuit.one(), while the N-type transistor turns off, leaving the output unconnected to the ground.

NOT gates are probably the simplest components you can build using the current primitive elements provided by our simulator. Now that we’re familiar with transistors, it’s time to expand our component set and build some of the most fundamental logic gates. In addition to NOT gates, you’ve likely heard of AND and OR gates, which are a bit more complex—mainly because they take more than one input. Here’s their definition:

AND gate: outputs 0 when at least one of the inputs is 0, and outputs 1 only when all of the inputs are 1. Otherwise the output is faulty (X). In the table below, * stands for any state.

| A | B | A AND B |
|---|---|---|
| 0 | * | 0 |
| * | 0 | 0 |
| 1 | 1 | 1 |
| otherwise | otherwise | X |

OR gate: outputs 1 when at least one of the inputs is 1, and outputs 0 only when all of the inputs are 0. Otherwise the output is unknown (X). In the table below, * stands for any state.

| A | B | A OR B |
|---|---|---|
| 1 | * | 1 |
| * | 1 | 1 |
| 0 | 0 | 0 |
| otherwise | otherwise | X |
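The two definitions above can be sketched as plain functions over the four wire states. This is a behavioral illustration only; `and4` and `or4` are names invented for the snippet.

```python
FREE, UNK, ZERO, ONE = "Z", "X", 0, 1


def and4(a, b):
    """Four-state AND: a single 0 decides the result, whatever the other input is."""
    if a == ZERO or b == ZERO:
        return ZERO
    if a == ONE and b == ONE:
        return ONE
    return UNK


def or4(a, b):
    """Four-state OR: a single 1 decides the result, whatever the other input is."""
    if a == ONE or b == ONE:
        return ONE
    if a == ZERO and b == ZERO:
        return ZERO
    return UNK


print(and4(ZERO, UNK), and4(ONE, ONE), and4(ONE, FREE))  # 0 1 X
print(or4(ONE, UNK), or4(ZERO, ZERO), or4(ZERO, FREE))   # 1 0 X
```

The interesting rows are the first ones: a faulty input does not spoil the output as long as the other input already settles the answer.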

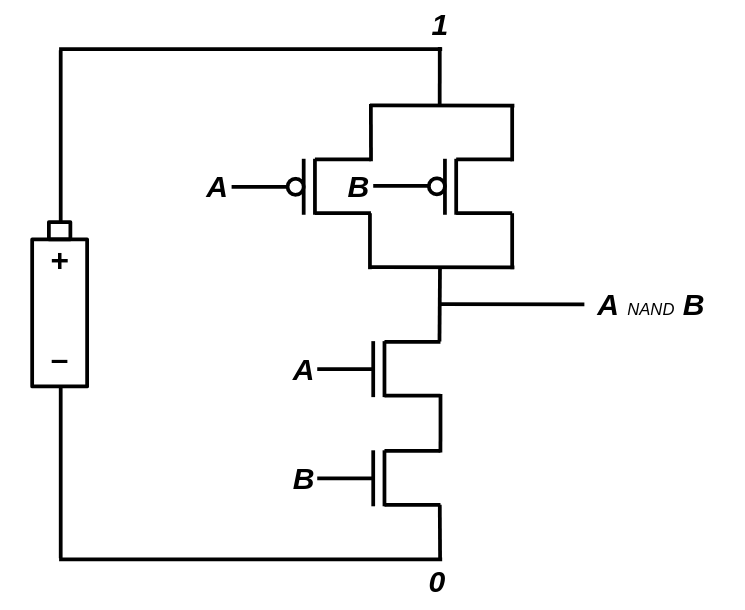

Mother of the gates

A NAND gate is a logic gate that outputs 0 if and only if both of its inputs are 1. It is essentially an AND gate with its output inverted. It can be proven that all the basic logic gates (AND, OR, NOT) can be built using different combinations of this single gate:

- \(NOT(x) = NAND(x, x)\)

- \(AND(x, y) = NOT(NAND(x, y)) = NAND(NAND(x, y), NAND(x, y))\)

- \(OR(x, y) = NAND(NOT(x), NOT(y)) = NAND(NAND(x, x), NAND(y, y))\)

It is the "mother gate" of all logic circuits. Although building everything with NAND gates would be very inefficient in practice, for the sake of simplicity, we'll stick to NAND gates and try to construct other gates by connecting them together.
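If you don't want to take the three identities on faith, you can check them exhaustively. The sketch below uses ordinary Python booleans rather than our wire states, and the function names are chosen just for this snippet.

```python
from itertools import product


def nand(x, y):
    return not (x and y)


def not_(x):
    return nand(x, x)


def and_(x, y):
    return nand(nand(x, y), nand(x, y))


def or_(x, y):
    return nand(nand(x, x), nand(y, y))


# Try every combination of inputs and compare with Python's own operators.
for x, y in product([False, True], repeat=2):
    assert not_(x) == (not x)
    assert and_(x, y) == (x and y)
    assert or_(x, y) == (x or y)

print("all three identities hold")
```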

It turns out that we can build NAND gates with strong and accurate output signals using 4 transistors (2 Type-N and 2 Type-P). Let's prototype a NAND gate using our simulated N/P transistors!

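```python
def Nand(circuit, in_a, in_b, out):
    inter = circuit.new_wire()
    # Two P transistors in parallel: pull out up to 1 when either input is 0.
    circuit.add_component(PTransistor(in_a, circuit.one(), out))
    circuit.add_component(PTransistor(in_b, circuit.one(), out))
    # Two N transistors in series: pull out down to 0 only when both inputs are 1.
    circuit.add_component(NTransistor(in_a, circuit.zero(), inter))
    circuit.add_component(NTransistor(in_b, inter, out))
```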

Now, other primitive gates can be defined as combinations of NAND gates. Take the NOT gate as an example. Here is a third way we can implement a NOT gate (So far, we have implemented a NOT gate in two ways: 1. Describing its behavior through plain Python code, and 2. By connecting a pair of Type-N and Type-P transistors):

```python
def Not(circuit, inp, out):
    Nand(circuit, inp, inp, out)
```

Go ahead and implement the other primitive gates using the NAND gate we just defined. After that, we can start creating useful circuits from these gates!

```python
def And(circuit, in_a, in_b, out):
    not_out = circuit.new_wire()
    Nand(circuit, in_a, in_b, not_out)
    Not(circuit, not_out, out)


def Or(circuit, in_a, in_b, out):
    not_out = circuit.new_wire()
    Nor(circuit, in_a, in_b, not_out)
    Not(circuit, not_out, out)


def Nor(circuit, in_a, in_b, out):
    inter = circuit.new_wire()
    # Two P transistors in series: pull out up to 1 only when both inputs are 0.
    circuit.add_component(PTransistor(in_a, circuit.one(), inter))
    circuit.add_component(PTransistor(in_b, inter, out))
    # Two N transistors in parallel: pull out down to 0 when either input is 1.
    circuit.add_component(NTransistor(in_a, circuit.zero(), out))
    circuit.add_component(NTransistor(in_b, circuit.zero(), out))
```

An XOR gate is another incredibly useful gate that comes in handy when building circuits that can perform numerical additions. The XOR gate outputs 1 only when the inputs are unequal, and outputs 0 when they are equal. XOR gates can be built from AND, OR, and NOT gates: \(Xor(x,y) = Or(And(x, Not(y)), And(Not(x), y))\). However, since XOR gates will be used frequently in our future circuits, it makes more sense to provide a transistor-level implementation for them, as this will require fewer transistors!

```python
def Xor(circuit, in_a, in_b, out):
    a_not = circuit.new_wire()
    b_not = circuit.new_wire()
    Not(circuit, in_a, a_not)
    Not(circuit, in_b, b_not)

    # Pull out up to 1 when a = 0 and b = 1
    inter1 = circuit.new_wire()
    circuit.add_component(PTransistor(b_not, circuit.one(), inter1))
    circuit.add_component(PTransistor(in_a, inter1, out))

    # Pull out up to 1 when a = 1 and b = 0
    inter2 = circuit.new_wire()
    circuit.add_component(PTransistor(in_b, circuit.one(), inter2))
    circuit.add_component(PTransistor(a_not, inter2, out))

    # Pull out down to 0 when a = 1 and b = 1
    inter3 = circuit.new_wire()
    circuit.add_component(NTransistor(in_b, circuit.zero(), inter3))
    circuit.add_component(NTransistor(in_a, inter3, out))

    # Pull out down to 0 when a = 0 and b = 0
    inter4 = circuit.new_wire()
    circuit.add_component(NTransistor(b_not, circuit.zero(), inter4))
    circuit.add_component(NTransistor(a_not, inter4, out))
```

Sometimes, we simply need to connect two different wires. Instead of creating a new primitive component for that purpose, we can use two consecutive NOT gates. This will act like a simple jumper! We'll call this gate a Forward gate:

```python
def Forward(circuit, inp, out):
    tmp = circuit.new_wire()
    Not(circuit, inp, tmp)
    Not(circuit, tmp, out)
```

Hello World circuit!

The simplest digital circuit we might consider a "computer" is one that can perform basic arithmetic, like adding two numbers together. In digital circuits, information is represented using binary signals—0s and 1s. So, when we talk about adding numbers in a digital circuit, we're working with binary numbers.

To start with, let's focus on a very basic example: a circuit that can add two one-bit binary numbers. A one-bit number can only be either a 0 or a 1, so adding two of them can yield one of three possible results: 0 + 0 = 0, 0 + 1 = 1, and 1 + 1 = 10 (which is 2 in decimal). Notice that the result needs two bits, because 1 + 1 produces a carry; in the other cases, the second bit is simply 0.

To design such a circuit, one of the most effective approaches is to start by figuring out what the output should be for each possible combination of inputs. Since there are two inputs (each a one-bit number), there are four possible input combinations: 00, 01, 10, and 11. For each of these combinations, we can determine what the output should be. Since the result is always written as a two-bit number, we can break the circuit into two smaller subcircuits: one that calculates the sum bit (the least significant bit) and one that calculates the carry bit (the more significant bit). Each subcircuit works independently to compute its corresponding part of the result.

In this way, we approach building the circuit step by step, using logic gates to implement the required operations for each input combination.

| A | B | First digit (sum) | Second digit (carry) |
|---|---|---|---|
| 0 | 0 | 0 | 0 |
| 0 | 1 | 1 | 0 |
| 1 | 0 | 1 | 0 |
| 1 | 1 | 0 | 1 |

The relation of the second digit with A and B is very familiar; it's essentially an AND gate! Try to figure out how the first digit can be calculated by combining primitive gates. (Hint: It outputs 1 only when A is 0 AND B is 1, or A is 1 AND B is 0.)

Answer: It's an XOR gate! (\(Xor(x, y) = Or(And(x, Not(y)), And(Not(x), y))\)), and here is the Python code for the entire thing:

    def HalfAdder(circuit, in_a, in_b, out_sum, out_carry):
        Xor(circuit, in_a, in_b, out_sum)
        And(circuit, in_a, in_b, out_carry)
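To convince ourselves that these two gates really reproduce the truth table, here is a minimal sketch that models the gates as plain Python functions on the integers 0 and 1 instead of simulated wires. The lowercase helper names (`not_`, `and_`, `or_`, `xor_`, `half_adder`) are assumptions made for this illustration and are not part of our circuit simulator.

```python
from itertools import product

# Gates modeled as plain functions on the integers 0 and 1 (illustration only).
def not_(x):
    return 1 - x

def and_(x, y):
    return x & y

def or_(x, y):
    return x | y

def xor_(x, y):
    # Xor(x, y) = Or(And(x, Not(y)), And(Not(x), y))
    return or_(and_(x, not_(y)), and_(not_(x), y))

def half_adder(a, b):
    """Return (sum_bit, carry_bit) for two one-bit inputs."""
    return xor_(a, b), and_(a, b)

# Enumerate all four input combinations, just like the truth table.
half_adder_table = {
    (a, b): half_adder(a, b) for a, b in product((0, 1), repeat=2)
}

for (a, b), (sum_bit, carry_bit) in half_adder_table.items():
    print(a, b, "-> sum:", sum_bit, "carry:", carry_bit)
```

Reading the carry as the "twos" digit and the sum as the "ones" digit, every row satisfies 2 × carry + sum = A + B.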

What we have just built is known as a half-adder. With a half-adder, you can add 1-bit numbers together. You might think that we can build multi-bit adders by stacking multiple half-adders in a row, but that's not entirely correct. Recall that the addition algorithm we learned in primary school also applies to binary numbers. Imagine we want to add the binary numbers 1011001 (89) and 111101 (61) together. Here’s how it works:

      1111  1
       1011001
    +   111101
    ----------
      10010110

By examining the algorithm, we can see that for each digit, the addition involves three bits (not just two). In addition to the original bits, there is also a third carry bit from the previous addition that must be considered. Therefore, to design a multi-bit adder, we need a circuit that can add three one-bit numbers together. Such a circuit is known as a full-adder, and the third number is often referred to as the carry bit. Here’s the truth table for a full-adder, where C is the incoming carry, D0 is the sum bit, and D1 is the outgoing carry:

| A | B | C | D0 | D1 |
|---|---|---|---|---|
| 0 | 0 | 0 | 0 | 0 |
| 1 | 0 | 0 | 1 | 0 |
| 0 | 1 | 0 | 1 | 0 |
| 1 | 1 | 0 | 0 | 1 |
| 0 | 0 | 1 | 1 | 0 |
| 1 | 0 | 1 | 0 | 1 |
| 0 | 1 | 1 | 0 | 1 |
| 1 | 1 | 1 | 1 | 1 |

Building a full-adder is not that challenging. You can use two half-adders to compute the sum bit and then take the OR of the carry outputs to obtain the final carry bit.

    def FullAdder(circuit, in_a, in_b, in_carry, out_sum, out_carry):
        sum_ab = circuit.new_wire()
        carry1 = circuit.new_wire()
        carry2 = circuit.new_wire()
        HalfAdder(circuit, in_a, in_b, sum_ab, carry1)
        HalfAdder(circuit, sum_ab, in_carry, out_sum, carry2)
        Or(circuit, carry1, carry2, out_carry)
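If you would like to double-check the two-half-adder construction against the truth table, the following sketch does so with plain Python integers rather than simulated wires. The function names `half_adder` and `full_adder` are assumptions made for this illustration; they only mirror the structure of the circuit above.

```python
from itertools import product

def half_adder(a, b):
    """Return (sum_bit, carry_bit) of two one-bit inputs."""
    return a ^ b, a & b

def full_adder(a, b, carry_in):
    """Add three one-bit inputs using two half-adders and an OR."""
    sum_ab, carry1 = half_adder(a, b)
    total, carry2 = half_adder(sum_ab, carry_in)
    return total, carry1 | carry2

# Walk through all eight rows of the truth table.
full_adder_rows = []
for a, b, c in product((0, 1), repeat=3):
    d0, d1 = full_adder(a, b, c)
    full_adder_rows.append((a, b, c, d0, d1))
    # D1 is the "twos" digit and D0 the "ones" digit of A + B + C.
    assert 2 * d1 + d0 == a + b + c
    print(a, b, c, "->", d0, d1)
```

A simple OR is enough for the final carry because the two half-adders can never both produce a carry: if the first one does, then A and B are both 1, their sum bit is 0, and the second half-adder has nothing left to carry.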

Once we have a full-adder ready, we can proceed with building multi-bit adders. For example, to create an 8-bit adder, we need to connect eight full-adders in a row. The carry output of each adder serves as the carry input for the next, mimicking the addition algorithm we discussed earlier.

    def Adder8(circuit, in_a, in_b, in_carry, out_sum, out_carry):
        carries = [in_carry] + [circuit.new_wire() for _ in range(7)] + [out_carry]
        for i in range(8):
            FullAdder(
                circuit,
                in_a[i],
                in_b[i],
                carries[i],
                out_sum[i],
                carries[i + 1],
            )
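The same carry chain can be sketched without any wires at all, which makes it easy to replay the 89 + 61 example from earlier. This is only an illustrative model on Python integers; `to_bits`, `from_bits`, and `ripple_add` are hypothetical helper names, and bits are stored least significant first, assuming the same ordering as `in_a[i]` and `in_b[i]` above.

```python
def full_adder(a, b, carry_in):
    """Return (sum_bit, carry_out) for three one-bit inputs."""
    total = a ^ b ^ carry_in
    carry_out = (a & b) | (carry_in & (a ^ b))
    return total, carry_out

def to_bits(num, width=8):
    """Split an integer into bits, least significant bit first."""
    return [(num >> i) & 1 for i in range(width)]

def from_bits(bits):
    """Combine bits (least significant first) back into an integer."""
    return sum(bit << i for i, bit in enumerate(bits))

def ripple_add(a, b, carry_in=0, width=8):
    """Chain `width` full-adders; each carry feeds the next stage."""
    bits_a, bits_b = to_bits(a, width), to_bits(b, width)
    carry = carry_in
    out = []
    for i in range(width):
        sum_bit, carry = full_adder(bits_a[i], bits_b[i], carry)
        out.append(sum_bit)
    return from_bits(out), carry

result, carry_out = ripple_add(0b1011001, 0b111101)
print(result, carry_out)      # 150 0
print(ripple_add(200, 100))   # (44, 1): the ninth bit ends up in the carry
```

When the true sum does not fit in 8 bits, the extra bit shows up on the final carry, and the 8 sum bits hold whatever remains.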

Congratulations! We just added two 8-bit numbers using nothing but bare transistors. Before continuing our journey toward more complex circuits, let's ensure that our simulated model of the 8-bit adder is functioning correctly. If the 8-bit adder works properly, there is a high chance that the other gates are also functioning as expected.

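    def num_to_wires(circuit, num):
        # Convert an integer into 8 constant wires, least significant bit first.
        wires = []
        for i in range(8):
            bit = (num >> i) & 1
            wires.append(circuit.one() if bit else circuit.zero())
        return wires

    def wires_to_num(wires):
        # Read the wires back into an integer, least significant bit first.
        out = 0
        for i, w in enumerate(wires):
            if w.get() == ONE:
                out += 2**i
        return out

    if __name__ == "__main__":
        for x in range(256):
            for y in range(256):
                circuit = Circuit()
                wires_x = num_to_wires(circuit, x)
                wires_y = num_to_wires(circuit, y)
                # Output wires are created through the circuit, like all others.
                wires_out = [circuit.new_wire() for _ in range(8)]
                # The final carry gets its own wire so that it is not driven
                # onto the constant zero wire when x + y overflows.
                carry_out = circuit.new_wire()
                Adder8(circuit, wires_x, wires_y, circuit.zero(), wires_out, carry_out)
                circuit.update()
                out = wires_to_num(wires_out)
                if out != (x + y) % 256:
                    print("Adder is not working!")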

Here, we are checking if the outputs are correct for all possible inputs. We have defined two auxiliary functions, num_to_wires and wires_to_num, to convert numbers into a set of wires that can connect to an electronic circuit, and vice versa.

When addition is subtraction

So far, we have been able to implement the addition operation by combining N and P transistors. Our adder is limited to 8 bits, meaning that the input and output values are all in the range \([0,255]\). If you try to add two numbers whose sum exceeds 255, you will still get a result in the range \([0,255]\). This happens because a number larger than 255 cannot be represented by 8 bits, and an overflow occurs. Upon closer inspection, you’ll notice that what we have designed isn’t the typical addition operation we are used to in elementary school mathematics; instead, it’s modular addition over a finite set of 256 numbers. This means the addition results are taken modulo 256.

\(a \oplus b = (a + b) \bmod 256\)

It is good to know that addition modulo 256 has interesting properties:

- \((a \oplus b) \oplus c = a \oplus (b \oplus c)\)

- For every number \(x\) in the range \([0,255]\), there is a number \(y\) in the same range, where \(x \oplus y = 0\). \(y\) is known as the negative of \(x\).

Here, the negative of a non-zero number can be calculated by subtracting that number from the modulus (in this case, the modulus is \(2^8=256\)), and the negative of \(0\) is \(0\) itself. For example, the negative of \(10\) is \(256-10=246\), so \(10 \oplus 246 = 0\).

Surprisingly, the number \(246\) behaves exactly like \(-10\). Try adding \(246\) to \(15\). You will get \(246 \oplus 15 = 5\), which is the same as \(15 + (-10)\)! This has an important implication: we can perform subtraction without designing a new circuit! All we need to do is negate the number. Calculating the negative of a number \(a\) amounts to taking its bitwise NOT, that is, flipping all eight of its bits, which is the same as XOR-ing it with \(255\) (this gives \(255 - a\)), and then adding \(1\) to it (which results in \(256 - a\), our definition of negation). This is known as the two's complement form of a number.
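Here is a small sketch of that negation trick on ordinary Python integers, assuming 8-bit values. The names `MASK`, `add8`, `negate8`, and `sub8` are made up for this illustration and do not belong to our simulator. Flipping all eight bits is written as an XOR with 255.

```python
MASK = 0xFF  # eight 1-bits, i.e. 255

def add8(a, b):
    """8-bit addition: keep only the lowest 8 bits (modulo 256)."""
    return (a + b) & MASK

def negate8(a):
    """Two's complement negation: flip every bit, then add one."""
    inverted = a ^ MASK      # equals 255 - a for a in [0, 255]
    return add8(inverted, 1)  # equals (256 - a) modulo 256

def sub8(a, b):
    """Subtract by adding the negative; no dedicated circuit needed."""
    return add8(a, negate8(b))

print(negate8(10))    # 246
print(add8(246, 15))  # 5
print(sub8(15, 10))   # 5
print(sub8(10, 15))   # 251, which is how -5 looks in 8 bits
```

Note that `negate8(0)` comes out as 0, since 255 + 1 wraps around to 0.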

It’s incredible to see that we can build electronic machines capable of adding and subtracting numbers by simply connecting a bunch of transistors together! The good news is that we can go even further and design circuits that can perform multiplication and division, using the same approach we used for designing addition circuits. The details of multiplication and division circuits are beyond the scope of this book, but you are strongly encouraged to study them on your own!

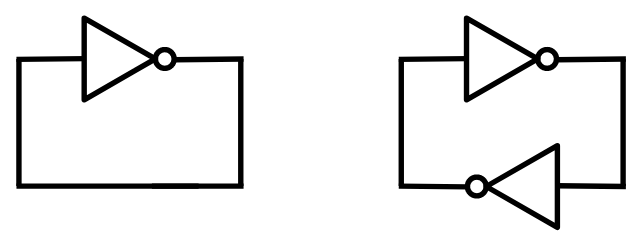

Not gates can be fun too!

If you remember, we discussed that you can't build interesting structures using only a single type of single-input/single-output component (such as NOT gates). In fact, we argued that with such components alone, we can only create domino-like structures, where a single cause propagates through the components until it reaches the last one. However, that's not entirely true: what if we connect the last component of the chain to the first one? This creates a loop, which is definitely something new. Assuming two consecutive NOT gates cancel each other out, we can build two different kinds of NOT loops:

After analyzing both possible loops, you will soon understand that the one with a single NOT gate is unstable. The voltage of the wire can be neither 0 nor 1. It creates a logical paradox, similar to the statement: This sentence is not true. The statement can be neither true nor false!

Practically, if you build such a circuit, it may oscillate rapidly between possible states, or the wire's voltage may settle at a value between the logical voltages of 0 and 1.

On the other hand, the loop with two NOT gates can achieve stability. The resulting circuit has two possible states: either the first wire is 1 and the second wire is 0, or the first wire is 0 and the second wire is 1. It will not switch between these states automatically. If you build such a circuit using real components, the initial state will be somewhat random. A slight difference in the transistor conditions may cause the circuit to settle into one of the states and remain there.
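We can get a feeling for both loops with a toy model in which time advances in discrete steps and every gate reads its input from the previous step. This is only a rough sketch under that idealized assumption (real circuits have analog voltages and delays), and `simulate_not_ring` is a hypothetical helper, not part of our simulator.

```python
def simulate_not_ring(num_gates, first_wire, steps=4):
    """Simulate a ring of NOT gates in lock-step.

    Wire i is driven by a NOT gate whose input is wire i - 1,
    and the first wire is driven from the last one, closing the loop.
    """
    # Initial guess: alternate the values around the ring.
    wires = [(first_wire + i) % 2 for i in range(num_gates)]
    history = [tuple(wires)]
    for _ in range(steps):
        # All gates update at once, based on the previous step's values.
        wires = [1 - wires[(i - 1) % num_gates] for i in range(num_gates)]
        history.append(tuple(wires))
    return history

one_gate = simulate_not_ring(1, 0)
two_gates = simulate_not_ring(2, 1)

print(one_gate)   # [(0,), (1,), (0,), (1,), (0,)]  keeps flipping
print(two_gates)  # [(1, 0), (1, 0), (1, 0), (1, 0), (1, 0)]  stays put
```

In this model the single-gate loop never finds a value that agrees with itself, while the two-gate loop keeps whichever of its two consistent states it started in.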

Try to remember

So far, we have been experimenting with stateless circuits—circuits that do not need to store or remember anything to function. However, circuits without memory are severely limited in their capabilities. To unlock their full potential, we need to explore how to store data within a digital circuit and maintain it over time. This is one of the most critical steps before we can build a computer, as computers are essentially machines that manipulate values stored in some kind of memory. Without memory, a computer cannot perform complex tasks or execute meaningful programs.

As a child, you may have tried to leave a light switch in a "middle" position. If the switch was well-made, you probably found it frustratingly difficult to do! The concept of memory arises when a system with multiple possible states can only stabilize in a single state at a time. Once it becomes stable, it can only change through an external force. In this sense, a light switch can function as a single-bit memory cell.

Another silly example: imagine a piece of paper—it remains stable in its current state. If you set it on fire, it gradually changes until it is completely burned, after which it stabilizes again. Keeping the paper in a "half-burned" state is not easy! You could consider the paper as a crude single-bit memory cell, but it has a major flaw: once burned, it cannot return to its original state.

Fortunately, there are ways to build circuits with multiple possible states, where only one state remains stable at a time. The simplest example is a circuit that forms a loop by connecting two NOT gates to each other. This setup involves two wires: if the first wire is 1, the second wire will be 0, and the circuit will stabilize in that configuration (and vice versa).

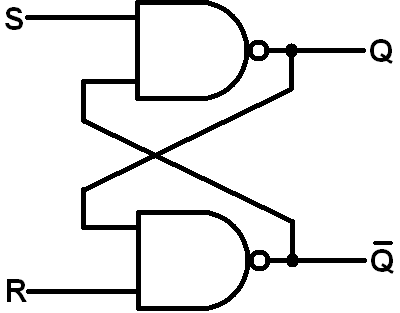

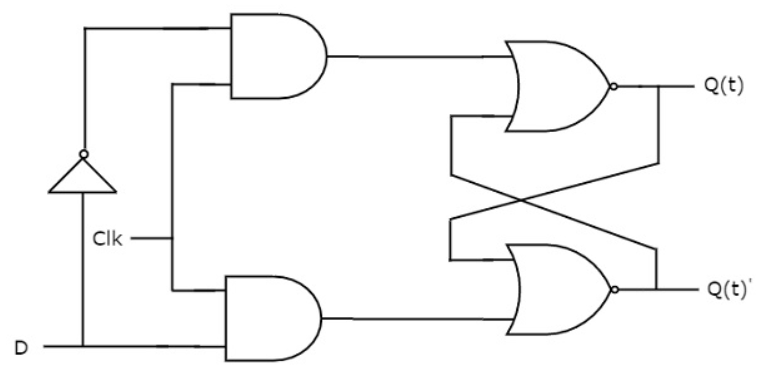

The problem with a simple NOT gate loop is that it cannot be controlled externally: it lacks an input mechanism to change its state. To fix this, we can replace the NOT gates with logic gates that accept two inputs instead of one. For example, using NOR gates instead allows us to introduce external control (a NOR gate whose extra input is held at 0 behaves exactly like a NOT gate). By providing voltages to the extra input pins, a user can modify the internal state of the loop. This type of circuit is known as an SR latch:

Now, the user can set the latch to the first or second state by setting S=1 and R=0, or S=0 and R=1, respectively. The magic lies in the fact that the circuit will stably remain in the latest state, even after both S and R inputs are set to zero!

Here you can find an example of an SR latch implemented in Python:

    def SRLatch(circuit, in_r, in_s, out_data, initial=0):
        neg_out = circuit.new_wire()
        Nor(circuit, in_r, neg_out, out_data)
        Nor(circuit, in_s, out_data, neg_out)
        neg_out.assume(1 - initial)
        out_data.assume(initial)

Notice how we ingeniously feed two set/reset inputs into something that is essentially a NOT loop! Now, there’s something tricky about simulating a NOR/NOT loop: How can the first NOR gate function when neg_out has not been calculated yet? Or similarly, how can the second NOR gate calculate neg_out when out_data has not been calculated yet? There are two approaches we can use to resolve the chicken-and-egg problem in our simulation:

- Give up and attempt to simulate a memory-cell component without performing low-level transistor simulations. This would require us to define a new Primitive Component (similar to N/P transistors), which includes an update() function that mimics the expected behavior of a latch.

- Hint the simulator with the initial values of the wires, and let the simulator settle into a stable state by running the update function of the transistors for a few iterations, stopping once the wire values stop changing (meaning that the system has entered a stable state).

That’s where the assume function we defined for our Wire class comes in handy. The assume function essentially hints to the simulator the initial value(s) of the wires in our memory cell, making it stable. In fact, if you don’t call these assume functions when defining the gates, the simulator won’t be able to simulate it, and the output of both NOR gates would become X. Assumption is only necessary when we want to initialize the circuit in a stable state for the first time. After that, the circuit will ignore the initial assumed values and will start working as expected. Note: In the real world, the memory cell randomly settles into one of the set/reset states due to environmental noise and slight differences between the transistors.
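To make the second approach more tangible, here is a stripped-down sketch of the "assume, then iterate until nothing changes" idea. It works on plain integers at the gate level rather than on our transistor-level simulator, and the names `nor` and `settle_sr_latch` are assumptions made for this illustration.

```python
def nor(x, y):
    """NOR gate on the integers 0 and 1."""
    return 0 if (x or y) else 1

def settle_sr_latch(s, r, q, neg_q, max_iters=10):
    """Re-evaluate two cross-coupled NOR gates until the wires stop changing.

    q and neg_q are the assumed (or previously stored) wire values.
    """
    for _ in range(max_iters):
        new_q = nor(r, neg_q)
        new_neg_q = nor(s, new_q)
        if (new_q, new_neg_q) == (q, neg_q):
            return q, neg_q  # nothing changed: the latch is stable
        q, neg_q = new_q, new_neg_q
    raise RuntimeError("latch did not settle")

assumed = (0, 1)                                 # hint: start in the reset state
after_set = settle_sr_latch(1, 0, *assumed)      # S=1, R=0
held = settle_sr_latch(0, 0, *after_set)         # S=0, R=0 keeps the value
after_reset = settle_sr_latch(0, 1, *held)       # S=0, R=1

print(after_set, held, after_reset)  # (1, 0) (1, 0) (0, 1)
```

The initial guess only matters for the very first call. After that, each call starts from the values the previous one settled on, which is exactly what makes the loop behave like memory.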

A more user-friendly latch

SR latches are perfect examples of memory cells since they can store a single bit of data. However, the unusual aspect of SR latches is that they require two inputs to determine whether to set them to a set (1) or reset (0) state. To set the latch to a 1 (set) state, you would feed it S=1 & R=0, and to reset it to 0 (reset) state, you would feed it S=0 & R=1. But what if we only had a single wire, D=0/1, and wanted the latch to remember the value stored in that wire? This is where the D-latch, a second type of latch, comes in handy.